AI has been dominating the news cycle once again, and not just because Google unloaded an incredible number of new Gemini features, Gemini-powered tools, and AI experiences when the company hosted its annual I/O developer conference this week.

A day before the main I/O 2025 keynote, Microsoft’s Build conference also produced plenty of AI news. More interesting (and shocking) was OpenAI’s formal acknowledgment on Wednesday that it’s building its own hardware for ChatGPT.

OpenAI announced that it acquired Jony Ive's new venture "io," which has already designed a supposedly groundbreaking ChatGPT personal device that might launch as soon as next year.

OpenAI's surprise announcement was a clear attack on Google's increasingly impressive Gemini capabilities. There's no denying that Gemini can do things ChatGPT can't, thanks to the proprietary apps Google can leverage to turn Gemini into an even better, more personal assistant.

OpenAI also announced ChatGPT improvements for developers, which should allow them to build better, faster AI apps in the future.

Amid all this, OpenAI quietly updated the ChatGPT user interface without announcing or explaining the changes. The new composer UI may surprise you at first, but all the AI tools you've come to appreciate in ChatGPT are still there.

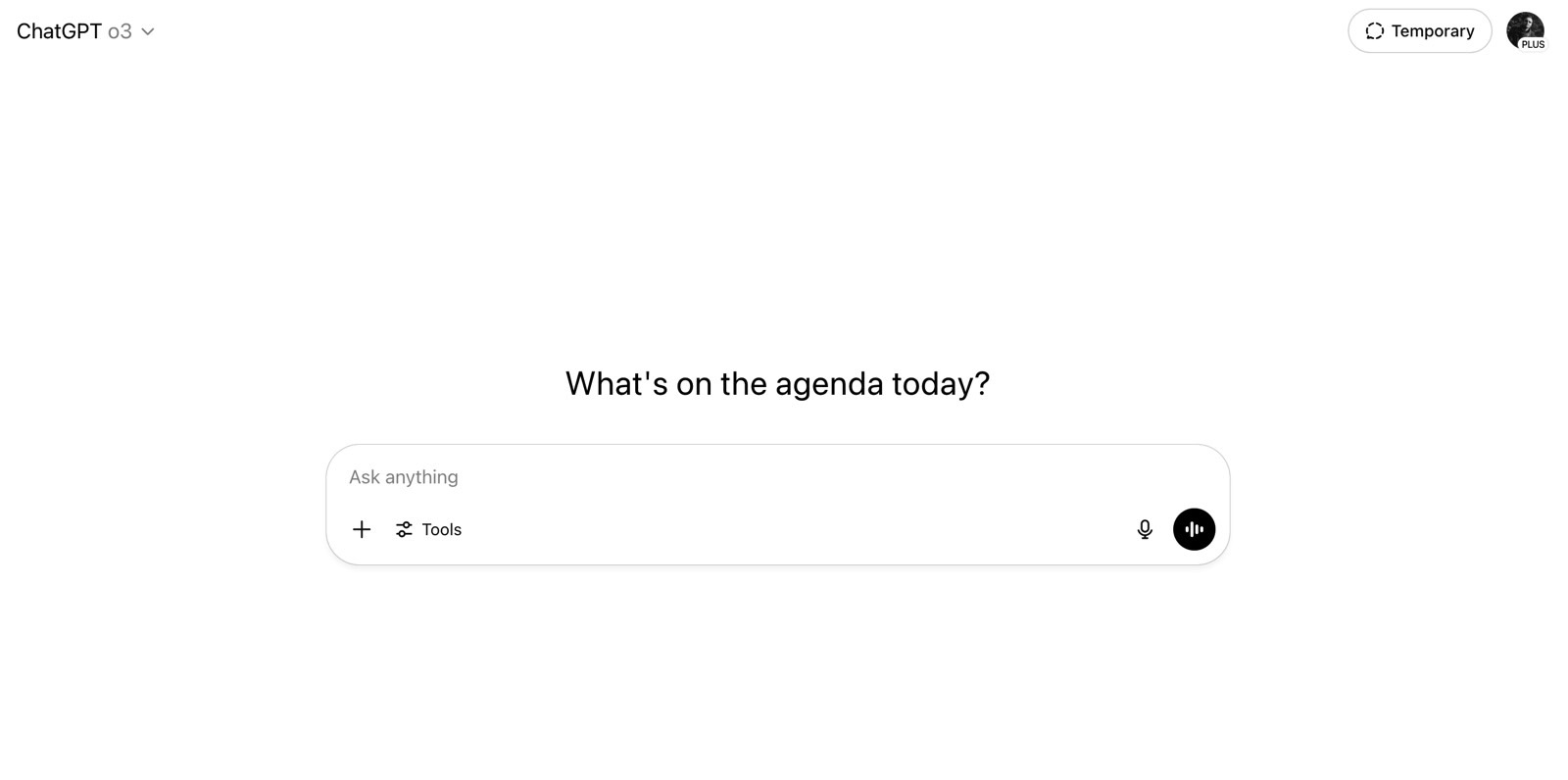

I was surprised to see the design change on Thursday, and you probably were, too. If you use ChatGPT regularly, you know where all the menus are and what they do. Here's what the ChatGPT composer UI looked like a few months ago in the ChatGPT web app:

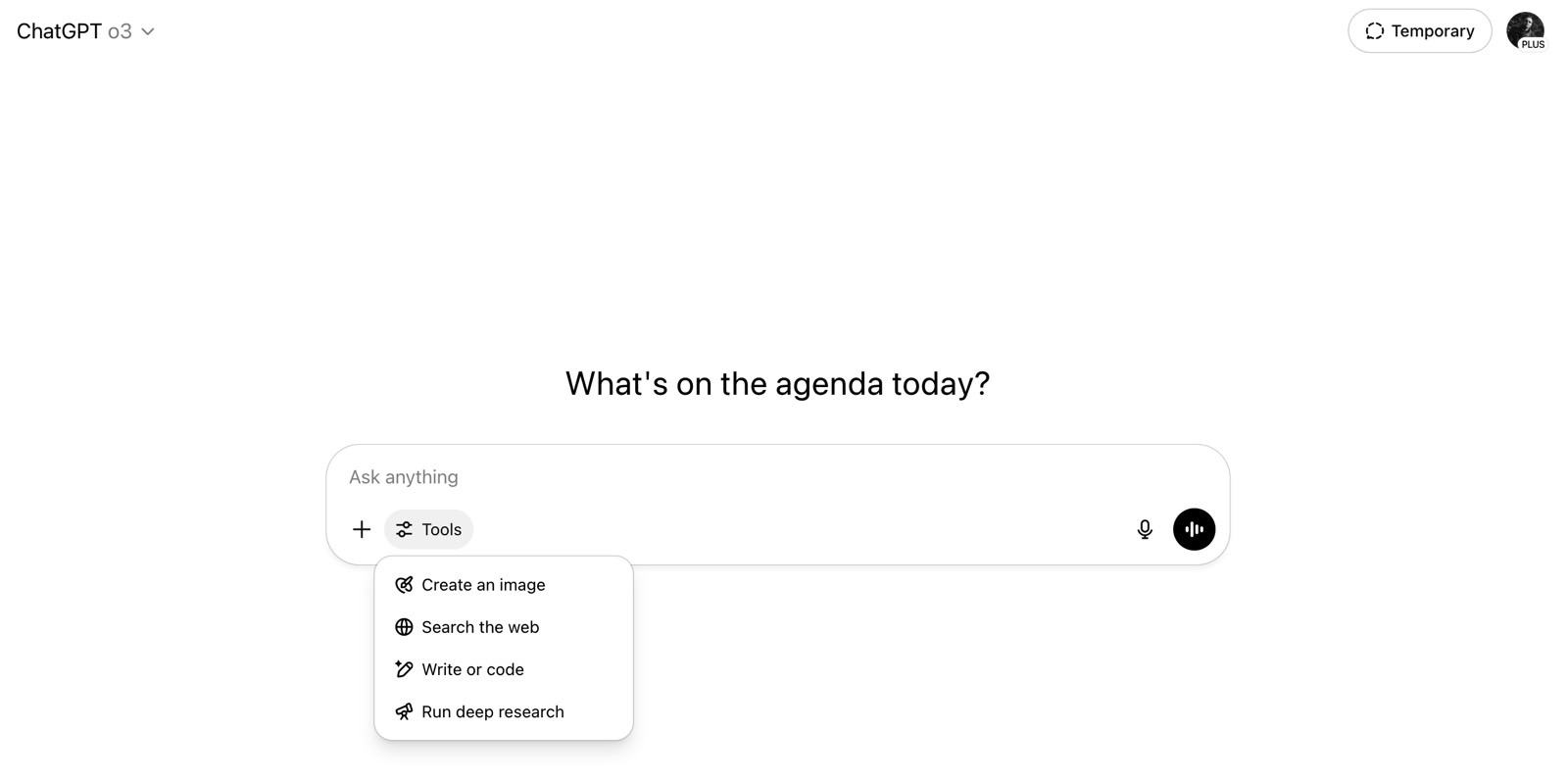

That design stayed in place until Wednesday, when OpenAI refreshed the composer with a simpler, cleaner user interface.

The menu items that used to sit along the bottom of the composer are now packed neatly into a Tools menu next to the + button.

Click the Tools menu to find all the ChatGPT tools you’ve been using lately. They have slightly different names, but it’s easy to understand what each does if you’ve been using a paid ChatGPT subscription for a while.

- Create an image (4o image generation)

- Search the web (ChatGPT Search)

- Write or code (Canvas)

- Run deep research (Deep Research)

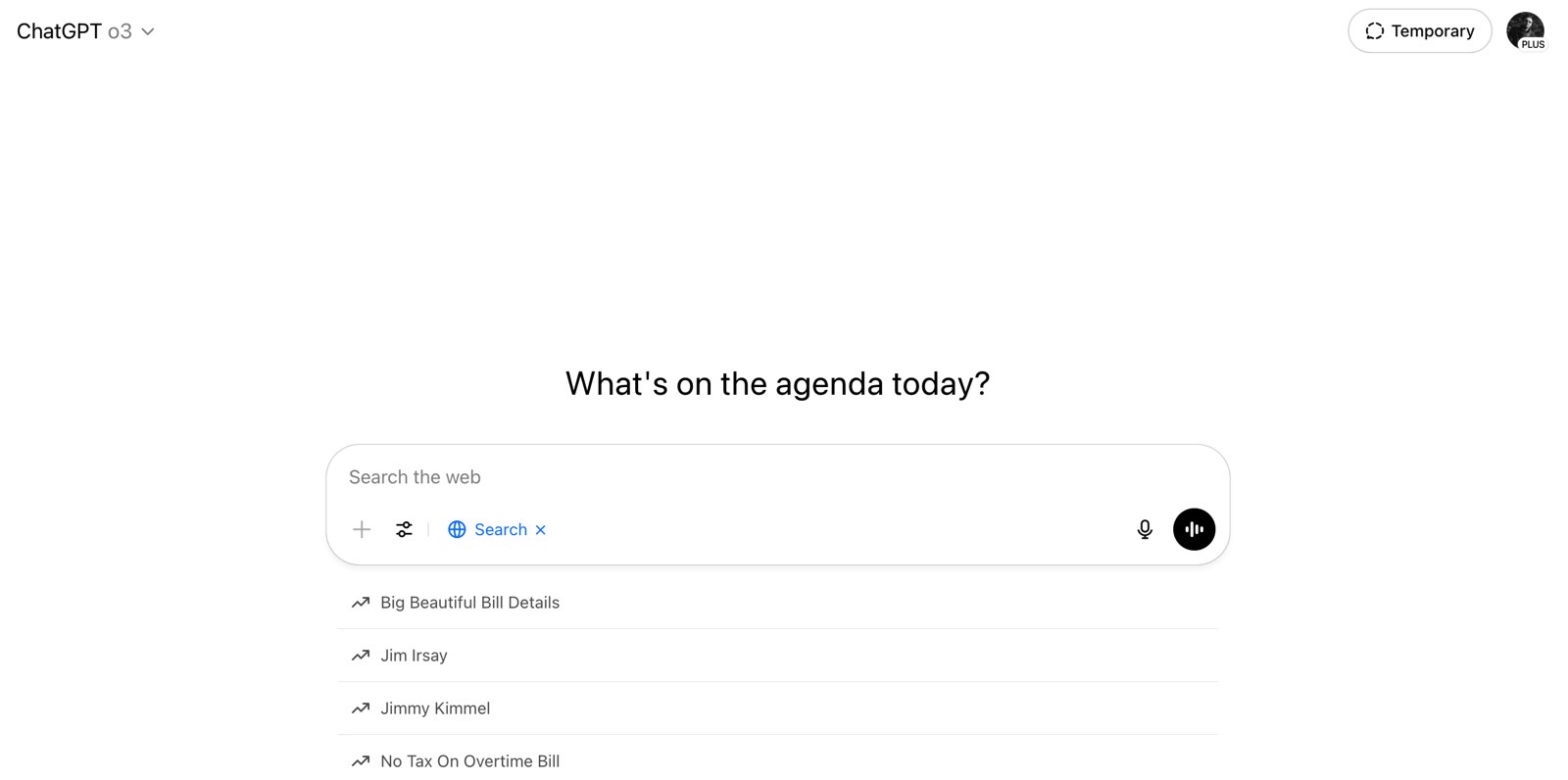

Select an option, and blue text will appear next to the Tools button in the composer, reminding you which AI tool you're using. Here's an example using the image generation feature:

Suggestions will appear under the composer if you need inspiration. You can always tap the X in that new button to stop using the tool.

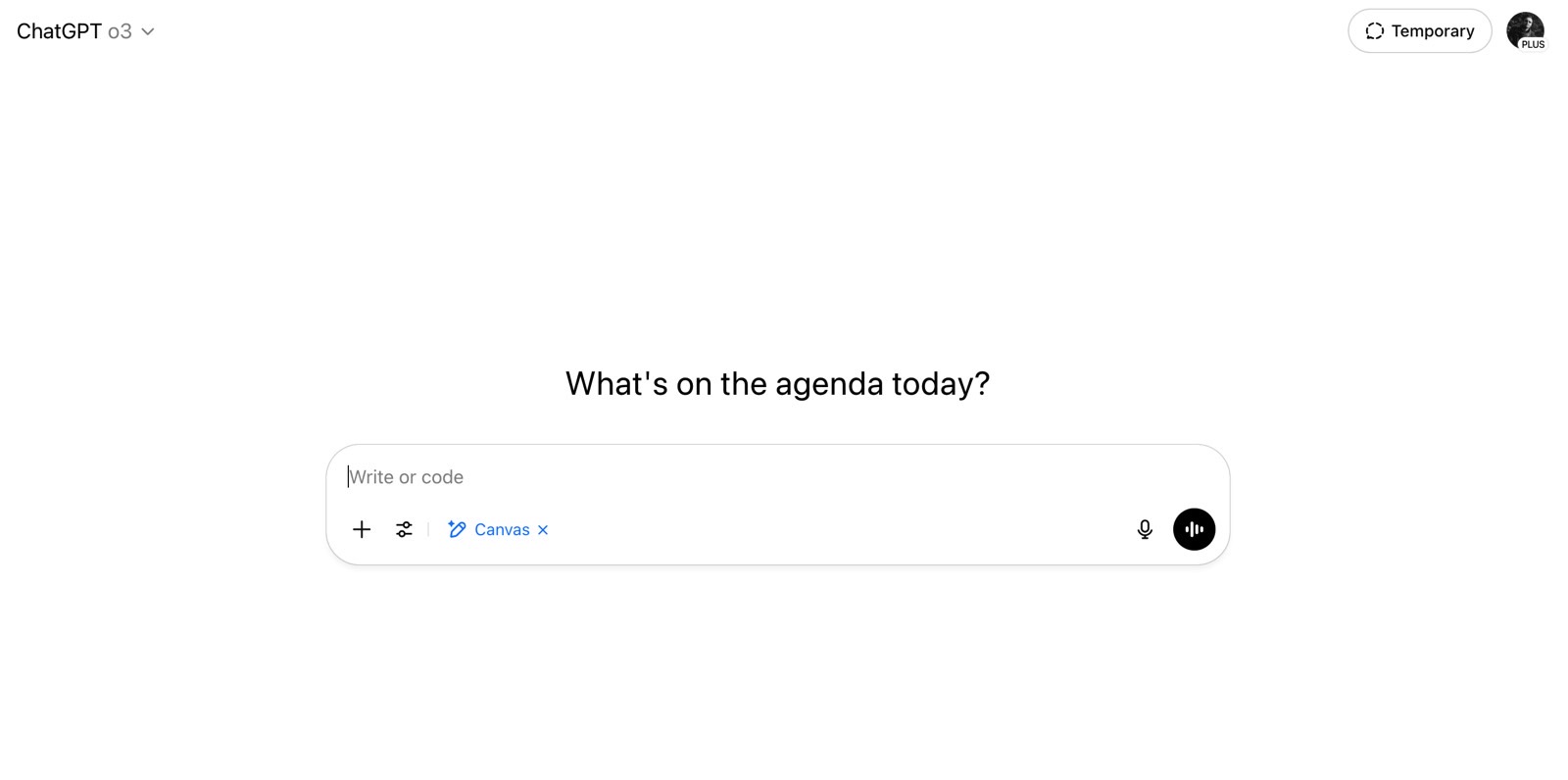

All tools behave similarly, as shown above for ChatGPT Search and below for Canvas.

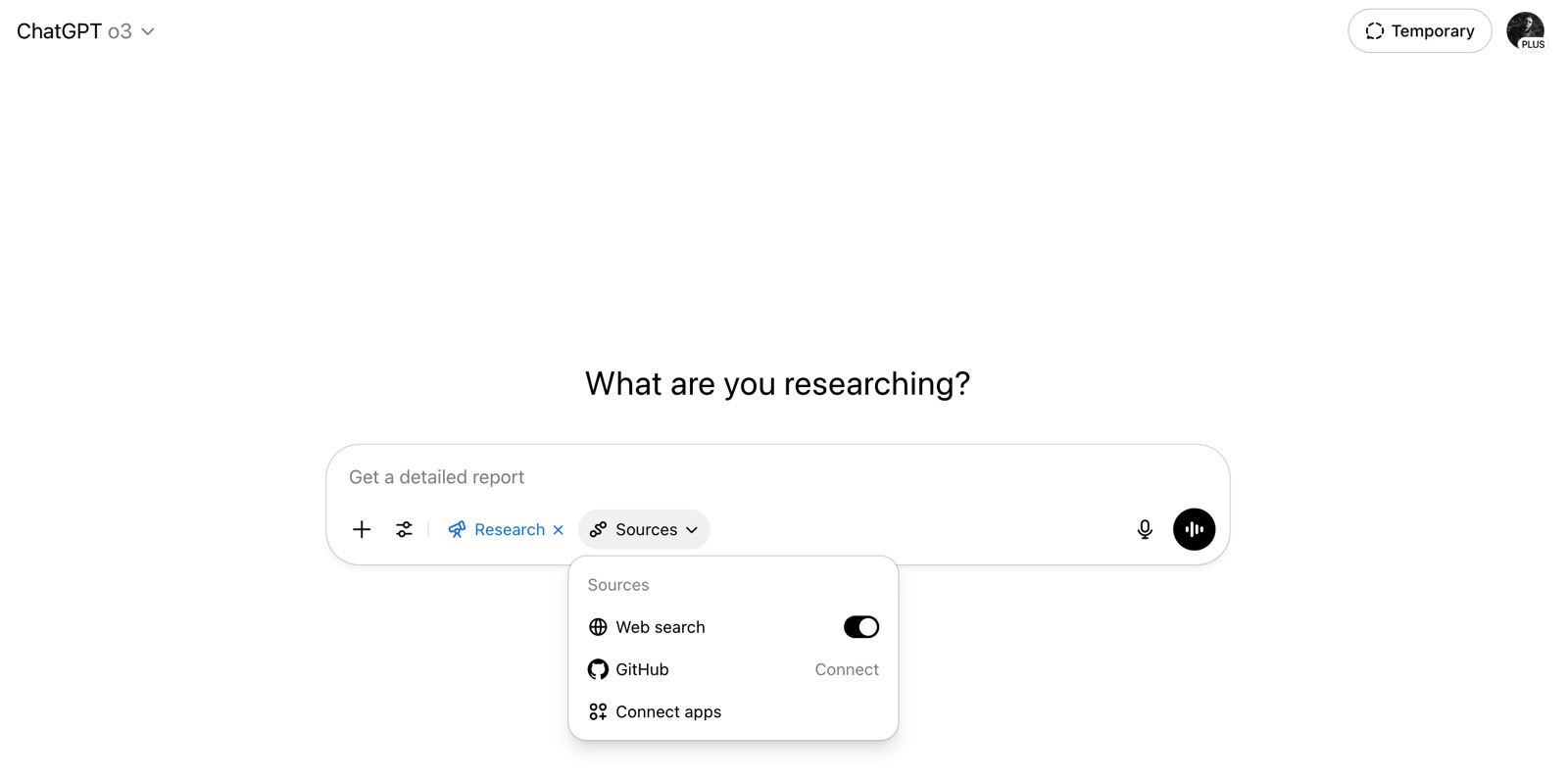

Tap the Deep Research button to open an extra menu that lets you customize the deep research experience. You can connect more apps, including GitHub, and toggle web search on and off.

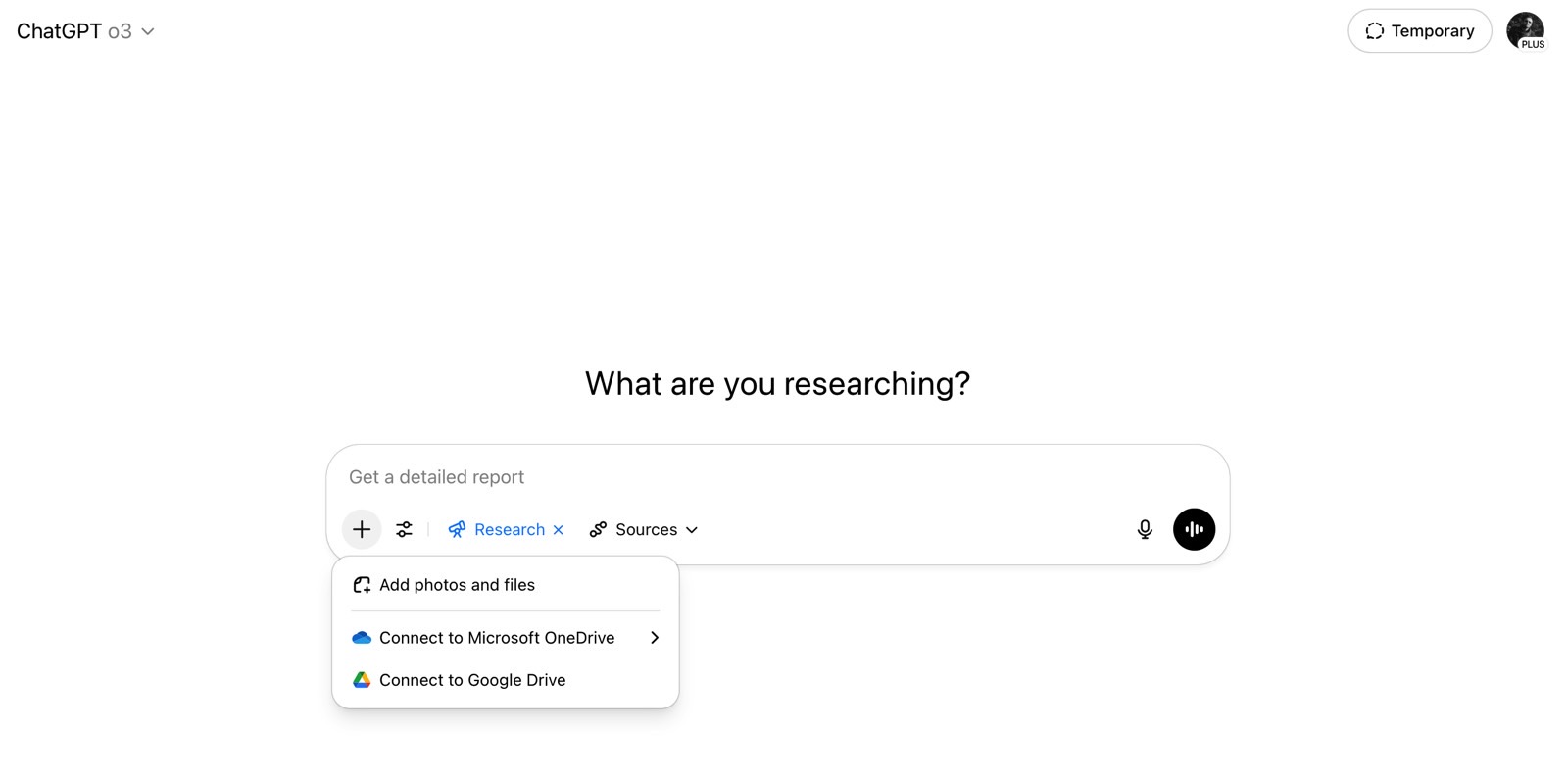

The + button works as it did before, letting you connect to cloud services and upload files.

The dictation and Advanced Voice Mode icons are also still in the composer area, so you can interact with the AI by voice.

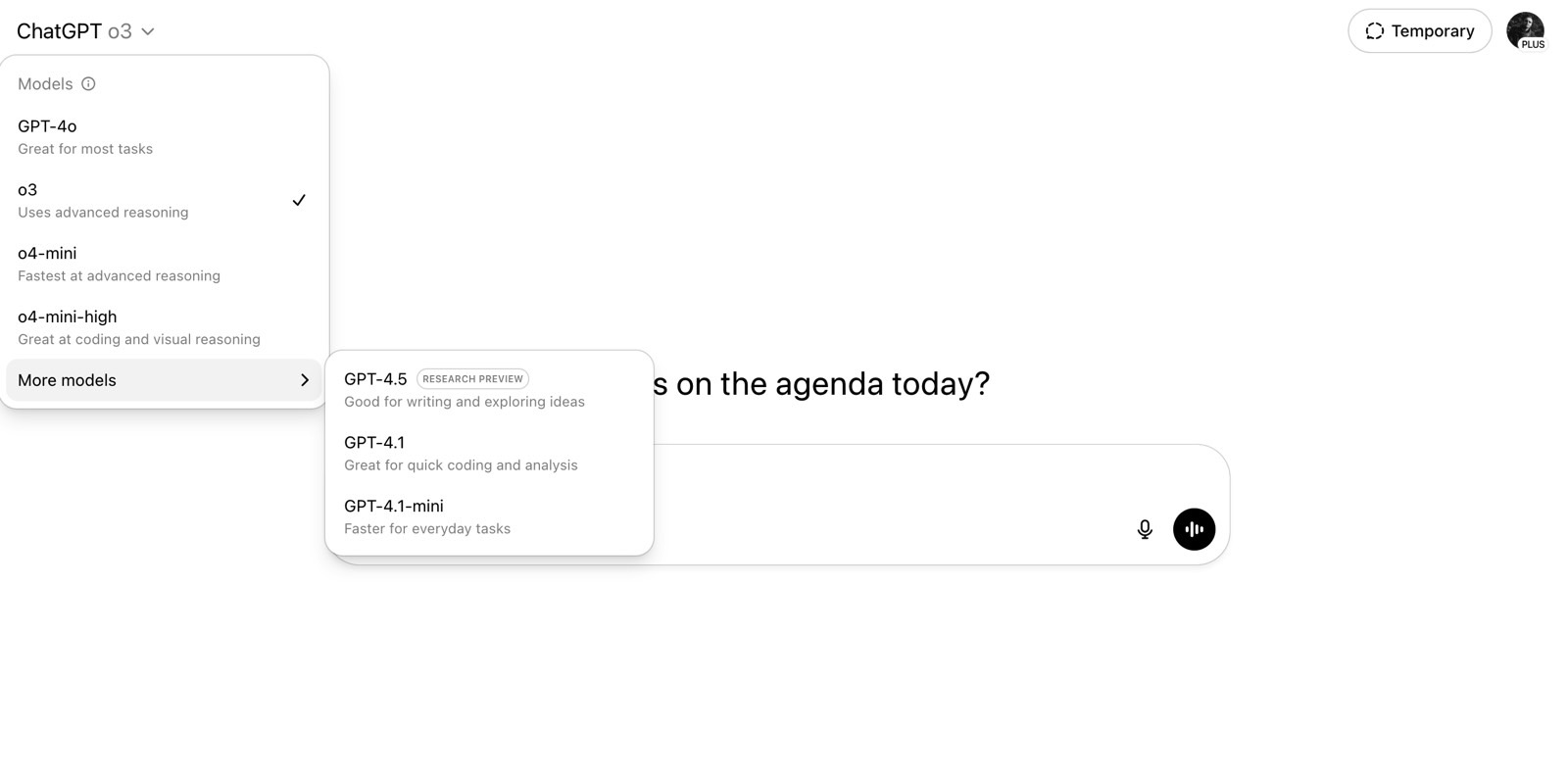

While we’re at it, I rechecked the ChatGPT models available under my Plus subscription, and nothing has changed since OpenAI brought GPT-4.1 to ChatGPT. And yes, the names remain confusing, especially if you’re new to ChatGPT.

The cleaner ChatGPT web UI now matches the design of the mobile app, with one exception: The ChatGPT iPhone app doesn't support Canvas.